The digital landscape is witnessing a remarkable transformation with the highly anticipated rollout of OpenAI’s latest LLM plugin offerings. These groundbreaking plugins promise to revolutionise how we interact with language models and artificial intelligence, fostering innovative applications and possibilities in various domains.

As developers from all corners of the globe eagerly embrace this new era, OpenAI’s LLM plugins unlock countless opportunities for enhancing user experiences, streamlining workflows, and elevating the capabilities of AI-driven systems. The potential for growth and innovation is immense, making this a thrilling time to be involved in the world of language model plugin development.

Integrating OpenAI’s LLM Plugins with External Apps and APIs

OpenAI’s LLM plugins are designed to work harmoniously with a diverse array of external apps and APIs, paving the way for remarkable synergy and enhanced functionality. By bridging the gap between the LLM system and third-party applications, these plugins empower developers to create bespoke solutions that capitalise on the strengths of multiple platforms.

In this seamless integration process, LLM plugins act as the connective tissue that facilitates data exchange and communication between the language model and the external apps or APIs. This interaction enables developers to harness the power of OpenAI’s LLM system in conjunction with other tools and services, creating enriched, multi-faceted applications.

For instance, a developer might create a plugin that combines the content generation prowess of OpenAI’s language models with a popular project management app. This collaboration could streamline content workflows, allowing users to generate relevant text snippets or project updates within the app, ultimately enhancing productivity and user experience.

The possibilities for such integrations are virtually endless, limited only by the developer’s imagination and ingenuity. By fostering seamless collaboration between OpenAI’s LLM plugins and external apps or APIs, developers can unlock new horizons of innovation and functionality, truly redefining the boundaries of artificial intelligence applications.

Delving into the Technicalities: Integrating with OpenAI’s LLM System

To successfully integrate with OpenAI’s LLM system, developers need to have a solid understanding of the technical aspects involved. In this section, we’ll explore the fundamental components and steps required for seamless integration with the LLM system.

API Key and Authentication

To begin, developers must acquire an API key by creating an account with OpenAI. This API key is essential for authentication and enables secure access to the LLM system. OpenAI’s API expects the key in the Authorization request header as a Bearer token, ensuring that only authorised developers can interact with the platform.
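As a minimal sketch of the header step, the snippet below builds the headers a plugin might attach to each request. The function name `build_auth_headers` and the placeholder key `sk-example` are illustrative assumptions, not part of any OpenAI library.

```python
def build_auth_headers(api_key: str) -> dict[str, str]:
    """Return HTTP headers carrying the API key as a Bearer token."""
    if not api_key or not api_key.strip():
        raise ValueError("API key is missing")
    return {
        "Authorization": f"Bearer {api_key.strip()}",
        "Content-Type": "application/json",
    }


if __name__ == "__main__":
    # "sk-example" is a placeholder, not a real key.
    print(build_auth_headers("sk-example"))
```

Rejecting an empty key up front means a misconfigured plugin fails immediately, rather than sending an unauthenticated request and failing later.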

Crafting API Requests

With the API key in hand, developers can start crafting API requests to interact with OpenAI’s LLM system. These requests may include actions such as generating text, fine-tuning models, or retrieving information about available models. API requests must adhere to the platform’s guidelines and use the appropriate endpoints, methods (GET, POST, etc.), and parameters.
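To make the endpoint, method, and parameters concrete, here is a hedged sketch that assembles (but does not send) a text generation request using only Python’s standard library. The chat completions URL and the model name are assumptions chosen for illustration, and `build_chat_request` is a hypothetical helper; check OpenAI’s documentation for the endpoints and models available to your account.

```python
import json
import urllib.request

# Assumption: the chat completions endpoint; other actions use other endpoints.
API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(
    api_key: str, prompt: str, model: str = "gpt-3.5-turbo"
) -> urllib.request.Request:
    """Assemble a POST request asking the model to respond to `prompt`."""
    payload = {
        "model": model,  # placeholder model name
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_chat_request("sk-example", "Summarise this week's project updates.")
    print(req.get_method(), req.full_url)
    # Sending it would look like: urllib.request.urlopen(req, timeout=30)
```

Keeping request construction separate from sending makes the request easy to inspect and test without touching the network.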

Handling API Responses

Once an API request is sent, the LLM system processes the request and returns a response. Developers must design their plugins to handle these API responses effectively, typically by parsing JSON data and extracting relevant information. Efficient handling of API responses is crucial for a smooth user experience, ensuring that the plugin displays accurate and timely results.
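The parsing step might look something like the sketch below. It assumes a chat-completion-style JSON body in which the generated text sits under `choices[0].message.content`; the helper name `extract_reply` and the sample body are illustrative only.

```python
import json


def extract_reply(raw_body: str) -> str:
    """Pull the first generated message out of a chat-completion-style body."""
    try:
        data = json.loads(raw_body)
    except json.JSONDecodeError as exc:
        raise ValueError("response body is not valid JSON") from exc
    try:
        return data["choices"][0]["message"]["content"].strip()
    except (KeyError, IndexError, TypeError, AttributeError) as exc:
        # The body parsed, but did not have the shape this sketch assumes.
        raise ValueError("unexpected response shape") from exc


if __name__ == "__main__":
    sample = '{"choices": [{"message": {"role": "assistant", "content": " Hello! "}}]}'
    print(extract_reply(sample))
```

Converting every parsing failure into a single `ValueError` gives the plugin one place to decide what the user sees when a response cannot be read.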

Error Handling and Rate Limiting

Robust error handling is a vital aspect of any plugin integration. Developers should account for potential issues, such as incorrect API requests or server-side errors, by implementing appropriate error handling mechanisms. Additionally, OpenAI’s LLM system enforces rate limiting to ensure fair usage, typically signalling an exceeded limit with an HTTP 429 response. Developers must design their plugins to respect these limits, for example by spacing out requests and retrying with increasing delays, to avoid throttling or temporary suspensions.
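One common way to respect rate limits is to retry with exponential backoff and a little random jitter. The sketch below is one possible approach, not OpenAI’s prescribed method: `RateLimitedError` is a hypothetical exception your own request code would raise when the API answers with HTTP 429, and `call_with_backoff` is an illustrative helper.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class RateLimitedError(Exception):
    """Hypothetical error raised by the caller's code on an HTTP 429 response."""


def call_with_backoff(
    send: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `send`, retrying rate-limited attempts with growing delays."""
    for attempt in range(max_attempts):
        try:
            return send()
        except RateLimitedError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: let the caller handle it
            # Delay doubles each attempt; jitter avoids synchronised retries.
            delay = base_delay * (2 ** attempt) + random.uniform(0, base_delay)
            sleep(delay)
    raise ValueError("max_attempts must be at least 1")
```

The `sleep` parameter exists so the waiting behaviour can be swapped out in tests; in production the default `time.sleep` is used. Other errors, such as malformed requests, are deliberately not retried, since repeating them would not help.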

Ensuring Security and Compliance

Lastly, developers must prioritise the security and privacy of users’ data when integrating with OpenAI’s LLM system. This includes implementing secure data storage and transmission methods, as well as adhering to relevant data protection regulations.
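A few small habits go a long way here. The sketch below illustrates three of them under stated assumptions: reading the key from an environment variable rather than hard-coding it (the name `OPENAI_API_KEY` is a common convention, assumed here), refusing to send data over anything but HTTPS, and masking secrets before they reach a log. All three helper names are hypothetical, and none of this replaces a proper review against the regulations that apply to your users.

```python
import os
from urllib.parse import urlparse


def load_api_key(env_var: str = "OPENAI_API_KEY") -> str:
    """Read the API key from the environment so it never lives in source code."""
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(f"{env_var} is not set")
    return key


def require_https(url: str) -> str:
    """Return `url` unchanged, or raise if it would send data unencrypted."""
    if urlparse(url).scheme != "https":
        raise ValueError(f"refusing non-HTTPS URL: {url}")
    return url


def mask_secret(secret: str, visible: int = 4) -> str:
    """Hide all but the last `visible` characters, for safe logging."""
    visible = max(0, visible)
    if len(secret) <= visible:
        return "*" * len(secret)
    hidden = len(secret) - visible
    return "*" * hidden + secret[hidden:]
```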

By mastering these technical components, developers can ensure a seamless integration with OpenAI’s LLM system, ultimately delivering exceptional functionality and value to users through their plugins.

OpenAI’s technical documentation is available here: https://beta.openai.com/docs/

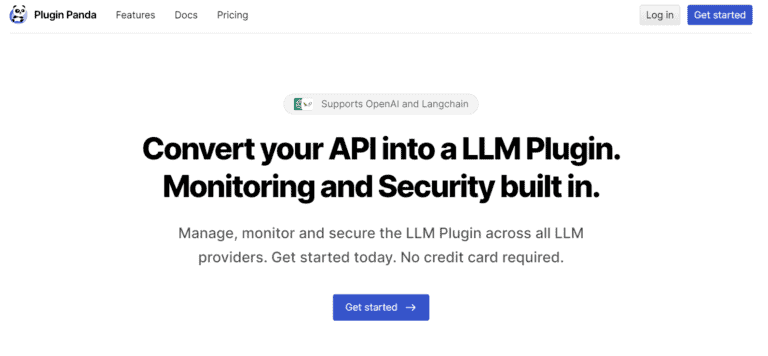

Introducing Plugin Panda

A Comprehensive Guide to Simplify LLM Plugin Creation and Deployment

Welcome, dear reader! Today, we’ll embark on a thrilling journey through the marvellous world of Plugin Panda. Plugin Panda is your all-in-one toolkit, designed to make LLM plugin creation, deployment, monitoring, and security an absolute breeze. Whether you’re a seasoned developer or a wide-eyed newcomer, Plugin Panda will be your trusty companion in crafting top-notch plugins for OpenAI and LangChain.

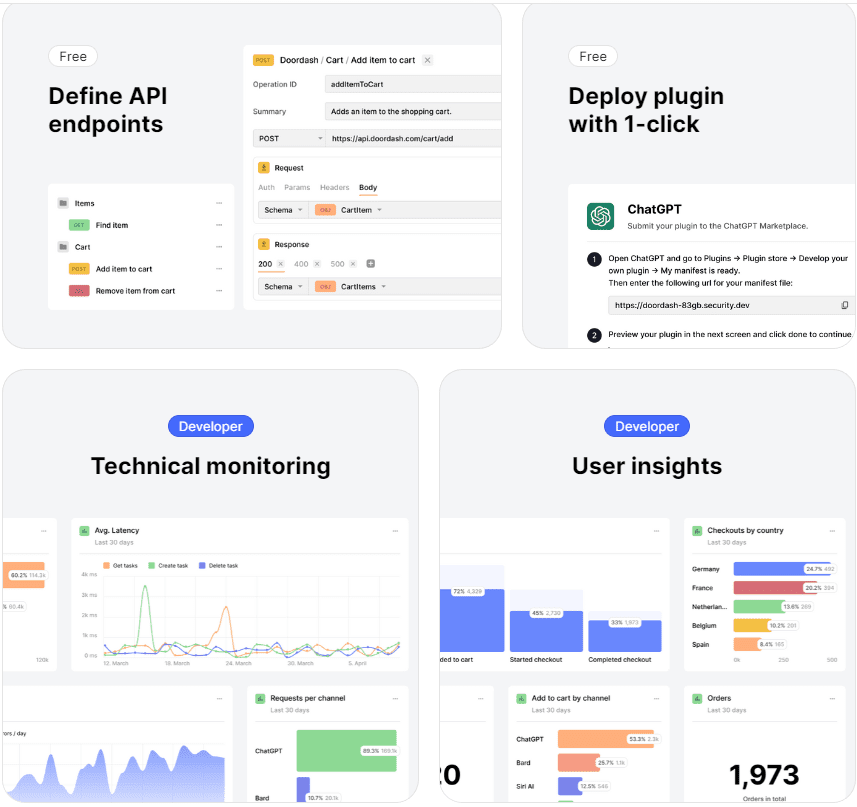

In this splendid article, we’ll outline the essential steps to manage your LLM plugins using Plugin Panda, including defining API endpoints, deploying your plugin with a single click, and ensuring its security and performance with our built-in tools.

What Awaits You in the Realm of Plugin Panda

Delve into the treasure trove of knowledge that awaits you in Plugin Panda’s documentation:

- Getting Started: Acquaint yourself with Plugin Panda’s user interface and features by creating an account.

- Basic Settings: Customise and manage your LLM plugins with Plugin Panda’s essential configuration options.

- API Endpoint Creation: Learn the art of defining API endpoints and ensuring compatibility with LLM platforms.

- Deploying Your LLM Plugin: Master the one-click deployment process for launching your plugin into the digital stratosphere.

- Monitoring and User Insights (Coming Soon): Unleash the power of Plugin Panda’s analytics tools for performance optimisation and user behaviour insights.

- Security and Threat Protection (Coming Soon): Fortify your plugin with proactive security measures, including threat protection, security alerts, and customisable traffic controls.

- FAQs: Find clarity in the labyrinth of Plugin Panda knowledge with answers to the most

The Allure of Plugin Panda

Plugin Panda offers a seamless and delightful experience in managing LLM plugins from inception to deployment, and beyond. Our platform streamlines the entire process, allowing you to focus on the truly important task: crafting a remarkable plugin that caters to your users’ needs. With built-in monitoring and security features, Plugin Panda equips you with the tools required to ensure your plugin’s reliability and safety.

As you navigate this article, you’ll uncover the myriad advantages of utilising Plugin Panda for your LLM plugin development. You’ll soon discover how simple it is to get started. So, without further ado, let’s dive in and build something extraordinary together!

To Conclude

Plugin Panda is a truly delightful tool that simplifies the LLM plugin creation, deployment, monitoring, and security process. By focusing on a user-friendly experience and robust built-in features, Plugin Panda empowers developers to concentrate on building exceptional plugins for their users. So go forth, explore the fantastic world of Plugin Panda, and create something genuinely brilliant!